IDEFICS: A Flamingo-based model, trained at scale for the community

Finetuning Demo Notebook:

Credit: Flamingo blog

This Google Colab notebook shows how to run predictions with the 4-bit quantized 🤗 Idefics-9B model and finetune it on a specific dataset.

IDEFICS is a multi-modal model based on the Flamingo architecture. It takes images and text as input and returns text output; it does not support image generation.

IDEFICS is built on top of two unimodal open-access pre-trained models to connect the two modalities. Newly initialized parameters in the form of Transformer blocks bridge the gap between the vision encoder and the language model. The model is trained on a mixture of image/text pairs and unstructured multimodal web documents.

The finetuned versions of IDEFICS behave like LLM chatbots while also understanding visual input.

You can play with the demo here

The code for this notebook was contributed by Léo Tronchon, Younes Belkada, and Stas Bekman. The IDEFICS model was contributed by Lucile Saulnier, Léo Tronchon, Hugo Laurençon, Stas Bekman, Amanpreet Singh, Siddharth Karamcheti, and Victor Sanh.

Install and import necessary libraries

Load quantized model

First, load the quantized version of the model. This allows us to use the 9B version of IDEFICS on a single 16 GB GPU.

If you print the model, you will see that all nn.Linear layers are in fact replaced by bnb.nn.Linear4bit layers.
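The loading code itself is not shown here, so the following is only a minimal sketch of what it might look like with `transformers` and `bitsandbytes`. The checkpoint name `HuggingFaceM4/idefics-9b`, the NF4 and double-quantization settings, and the helper names `approx_weight_gb` and `load_quantized_idefics` are assumptions for illustration, not necessarily what this notebook uses.

```python
CHECKPOINT = "HuggingFaceM4/idefics-9b"  # assumed checkpoint name


def approx_weight_gb(n_params: float, bits_per_param: int) -> float:
    """Rough size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def load_quantized_idefics(checkpoint: str = CHECKPOINT):
    """Load the processor and a 4-bit model. Needs a CUDA GPU and bitsandbytes."""
    # Imported lazily so the size estimate above runs without these packages.
    import torch
    from transformers import (
        AutoProcessor,
        BitsAndBytesConfig,
        IdeficsForVisionText2Text,
    )

    # Illustrative quantization settings (assumed, not taken from the notebook).
    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_use_double_quant=True,
        bnb_4bit_compute_dtype=torch.float16,
    )
    processor = AutoProcessor.from_pretrained(checkpoint)
    model = IdeficsForVisionText2Text.from_pretrained(
        checkpoint, quantization_config=bnb_config, device_map="auto"
    )
    return processor, model


print(approx_weight_gb(9e9, 16))  # weights in fp16
print(approx_weight_gb(9e9, 4))   # weights in 4-bit
```

Treating 9B as a round number, the 4-bit weights come to roughly 4.5 GB against 18 GB in fp16, which is what brings the model within reach of a 16 GB GPU. Activations, any modules left unquantized, and optimizer state come on top of that estimate.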

Inference

Let's write a simple helper function to test the model's inference.
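A helper of this kind could be sketched as follows. It assumes the processor accepts a list of samples, each a list mixing images (URLs or PIL images) and text, and that `<image>` and `<fake_token_around_image>` are the image placeholder tokens to suppress during generation. The processor call signature has changed across `transformers` versions, so treat this as a sketch; `build_prompt` and `check_inference` are illustrative names.

```python
# Placeholder tokens we do not want in the generated answer (assumed names).
BAD_WORDS = ["<image>", "<fake_token_around_image>"]


def build_prompt(image, question: str) -> list:
    """One sample: an image (URL or PIL image) followed by the text to complete."""
    return [image, f"Question: {question} Answer:"]


def check_inference(model, processor, prompts, max_new_tokens: int = 50) -> str:
    """Generate a completion for the first sample in `prompts` and return it."""
    tokenizer = processor.tokenizer
    # Token ids that generation is not allowed to produce.
    bad_words_ids = tokenizer(BAD_WORDS, add_special_tokens=False).input_ids

    inputs = processor(prompts, return_tensors="pt").to(model.device)
    generated_ids = model.generate(
        **inputs,
        bad_words_ids=bad_words_ids,
        eos_token_id=tokenizer.eos_token_id,
        max_new_tokens=max_new_tokens,
    )
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0]
```

With a loaded model and processor, a call might look like `check_inference(model, processor, [build_prompt(image_url, "What's on the picture?")], max_new_tokens=5)`, where `image_url` is whatever image you want to test.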

Let's run a prediction with the quantized model on the image below, which shows two kittens.

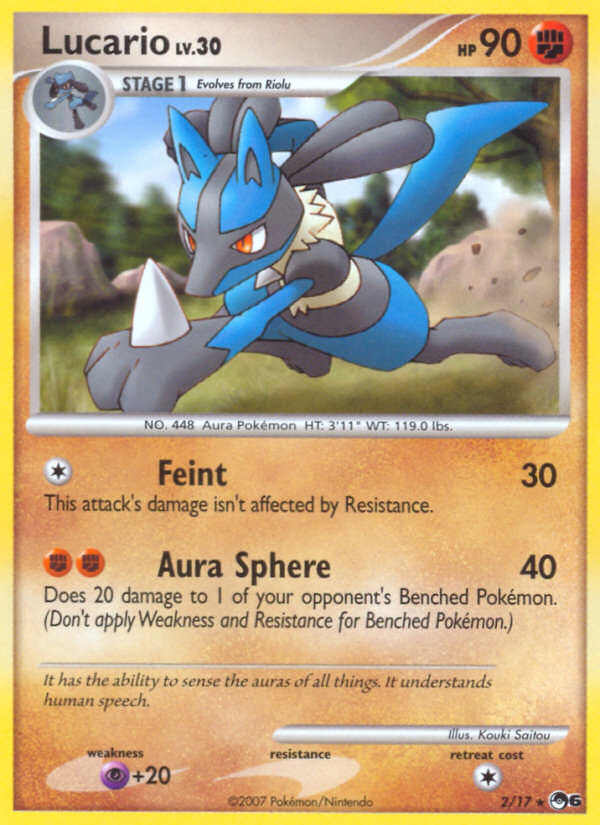

Now let's see how the model fares on Pokémon knowledge before we finetune it further.

Finetuning dataset

Prepare the dataset that will be used for finetuning
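One way to prepare such a dataset is to turn each row into an image plus a question/answer string and let the processor tokenize it. The sketch below assumes hypothetical columns `image_url`, `name`, and `caption`, and `</s>` as the end-of-sequence token; the actual dataset and column names are not given here, and `build_training_prompts` and `make_transform` are illustrative names.

```python
def build_training_prompts(batch: dict, eos_token: str = "</s>") -> list:
    """Turn a batch of columns into [image, text] samples ending with the target caption."""
    prompts = []
    for image, name, caption in zip(batch["image_url"], batch["name"], batch["caption"]):
        # Keep only the first sentence so the target stays short.
        first_sentence = caption.split(".")[0].strip()
        text = (
            "Question: What's on the picture? "
            f"Answer: This is {name}. {first_sentence}.{eos_token}"
        )
        prompts.append([image, text])
    return prompts


def make_transform(processor, device):
    """Return a function that could be passed to datasets.Dataset.set_transform."""

    def transform(batch):
        inputs = processor(build_training_prompts(batch), return_tensors="pt").to(device)
        # Causal language modeling: the labels are the input ids themselves.
        inputs["labels"] = inputs["input_ids"]
        return inputs

    return transform


example = {
    "image_url": ["https://example.com/card.png"],  # placeholder URL
    "name": ["Pikachu"],
    "caption": ["A yellow mouse. It stores electricity in its cheeks."],
}
print(build_training_prompts(example)[0][1])
```

Because the labels copy the input ids, the loss in this sketch covers the question as well as the answer; masking the question tokens is a possible refinement.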

LoRA

After specifying the low-rank adapters (LoRA) config, we wrap the model in a PeftModel using the get_peft_model utility function.
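As a rough illustration of this step, the sketch below wraps a loaded model with LoRA adapters using `peft`. The rank, alpha, dropout, and the choice of `q_proj`, `k_proj`, and `v_proj` as target modules are assumed values for illustration, and `lora_param_count` and `add_lora_adapters` are hypothetical helper names. The small counting function shows why the adapters are cheap: a rank-r adapter on a linear layer adds two matrices of shape r x in and out x r.

```python
def lora_param_count(in_features: int, out_features: int, r: int) -> int:
    """Trainable parameters LoRA adds to one linear layer (A: r x in, B: out x r)."""
    return r * (in_features + out_features)


def add_lora_adapters(model, r: int = 16):
    """Wrap a loaded model with LoRA adapters on the attention projections."""
    from peft import LoraConfig, get_peft_model  # imported lazily

    # Assumed hyperparameters; adjust to your model and budget.
    config = LoraConfig(
        r=r,
        lora_alpha=32,
        lora_dropout=0.05,
        bias="none",
        target_modules=["q_proj", "k_proj", "v_proj"],
    )
    peft_model = get_peft_model(model, config)
    peft_model.print_trainable_parameters()
    return peft_model


full = 4096 * 4096  # weights in a hypothetical 4096 x 4096 projection
added = lora_param_count(4096, 4096, r=16)
print(added, full, f"{added / full:.2%}")
```

For that hypothetical 4096 x 4096 layer, the adapter adds well under 1% of the layer's original parameter count, and only those added parameters receive gradients.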

Training

Finally, using the Hugging Face Trainer, we can finetune the model!

For the sake of the demo, we have set max_steps to 40. That is about 0.05 epochs on this dataset, so feel free to train for longer!
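To see how a step count translates into epochs, the sketch below multiplies out the examples seen per optimizer step. Only `max_steps=40` comes from the text; the batch size of 2, the 8 gradient accumulation steps, the learning rate, the output directory, and the 12,800-example training set are assumed values chosen for illustration. `epochs_covered` and `build_trainer` are hypothetical helper names.

```python
def epochs_covered(max_steps: int, per_device_batch_size: int,
                   grad_accum_steps: int, n_train_examples: int,
                   n_devices: int = 1) -> float:
    """Fraction of the training set seen after `max_steps` optimizer steps."""
    examples_seen = max_steps * per_device_batch_size * grad_accum_steps * n_devices
    return examples_seen / n_train_examples


# Assumed settings, except max_steps, which is the value quoted in the text.
TRAINING_KWARGS = dict(
    output_dir="idefics-9b-finetuned",  # hypothetical output directory
    learning_rate=2e-4,
    per_device_train_batch_size=2,
    gradient_accumulation_steps=8,
    max_steps=40,
    logging_steps=20,
    remove_unused_columns=False,  # keep the image tensors produced by the transform
    label_names=["labels"],
)


def build_trainer(model, train_dataset, eval_dataset=None):
    """Create a Hugging Face Trainer from the settings above."""
    from transformers import Trainer, TrainingArguments  # imported lazily

    args = TrainingArguments(**TRAINING_KWARGS)
    return Trainer(model=model, args=args,
                   train_dataset=train_dataset, eval_dataset=eval_dataset)


print(epochs_covered(
    TRAINING_KWARGS["max_steps"],
    TRAINING_KWARGS["per_device_train_batch_size"],
    TRAINING_KWARGS["gradient_accumulation_steps"],
    n_train_examples=12_800,  # hypothetical dataset size
))
```

Under these assumptions 40 steps cover 640 examples, so raising `max_steps` (or setting `num_train_epochs` instead) is the direct way to train on more of the data.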

It has been reported that fine-tuning in mixed precision fp16 can lead to overflows. As such, we recommend training in mixed precision bf16 when possible.
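The overflow risk comes from the narrow range of fp16, whose largest finite value is 65504, whereas bf16 keeps the exponent range of fp32 at the cost of precision. The sketch below illustrates this with the standard library only: it emulates bf16 by truncating the fp32 encoding to its top 16 bits, which is a simplification (real hardware usually rounds). The helper names are hypothetical.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE half-precision value


def fits_in_fp16(x: float) -> bool:
    """True if x can be stored as a finite fp16 number."""
    try:
        struct.pack("<e", x)  # "e" is the half-precision format code
        return True
    except OverflowError:
        return False


def round_trip_bf16(x: float) -> float:
    """Emulate bf16 by keeping the top 16 bits of the fp32 encoding (truncation)."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))[0]


def precision_flags(bf16_supported: bool) -> dict:
    """Keyword arguments for TrainingArguments: prefer bf16 when available."""
    return {"bf16": bf16_supported, "fp16": not bf16_supported}


print(fits_in_fp16(70000.0))     # a value just past the fp16 range
print(round_trip_bf16(70000.0))  # the same value stays finite in bf16
print(precision_flags(True))
```

On a CUDA machine, one option is to pass `torch.cuda.is_bf16_supported()` as the argument to `precision_flags` and merge the result into the training arguments.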

Push your new model to the hub!
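A minimal sketch of this step, assuming the finetuned model is a PEFT-wrapped model (so only the adapter weights are uploaded) and that you have a Hugging Face token with write permission. The repository naming scheme and the helper names `hub_repo_name` and `push_adapter` are illustrative assumptions.

```python
def hub_repo_name(checkpoint: str, suffix: str) -> str:
    """Derive a repository name such as 'idefics-9b-pokemon' from a checkpoint id."""
    return f"{checkpoint.split('/')[-1]}-{suffix}"


def push_adapter(model, repo_name: str, private: bool = True) -> None:
    """Log in to the Hugging Face Hub and upload the model."""
    from huggingface_hub import login  # imported lazily

    login()  # prompts for an access token
    model.push_to_hub(repo_name, private=private)


print(hub_repo_name("HuggingFaceM4/idefics-9b", "pokemon"))
```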
